Interpreting Kappa in Observational Research: Baserate Matters. Cornelia Taylor Bruckner, Vanderbilt University.

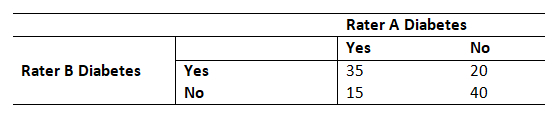

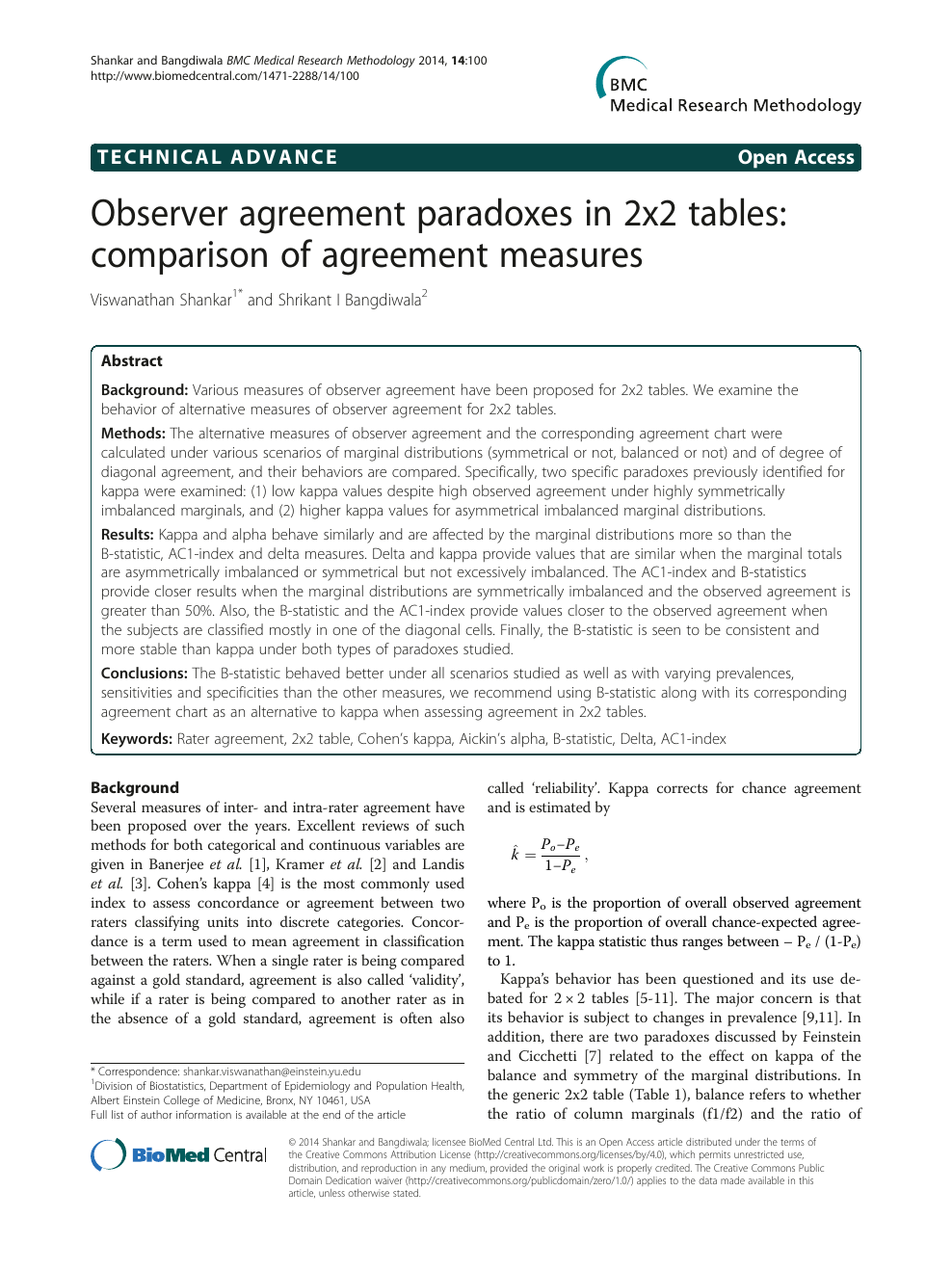

Observer agreement paradoxes in 2x2 tables: comparison of agreement measures (research paper in veterinary science, CyberLeninka)

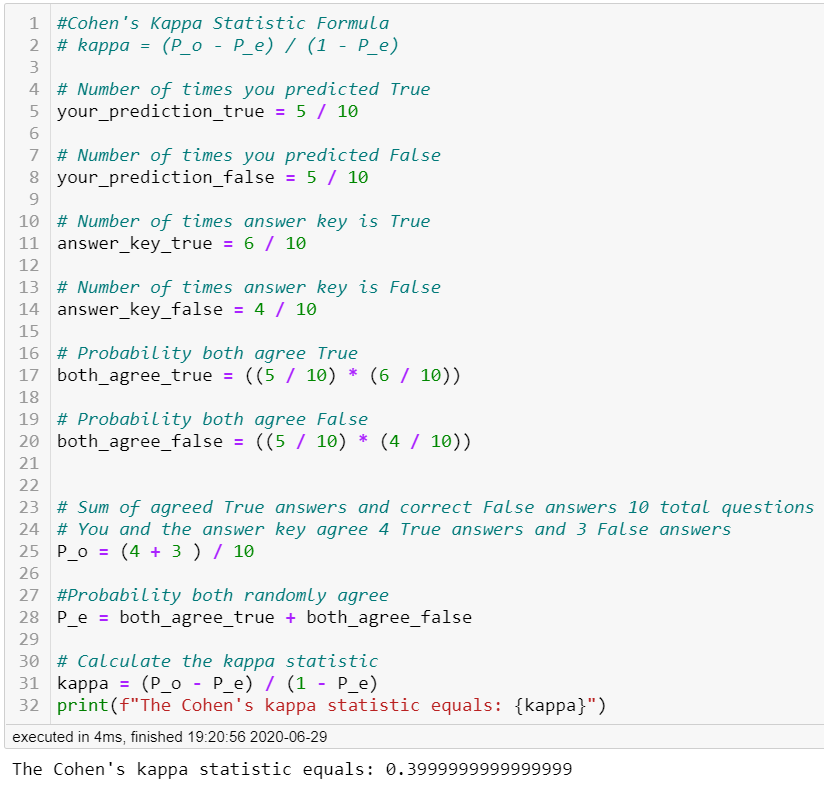

Systematic literature reviews in software engineering—enhancement of the study selection process using Cohen's Kappa statistic - ScienceDirect

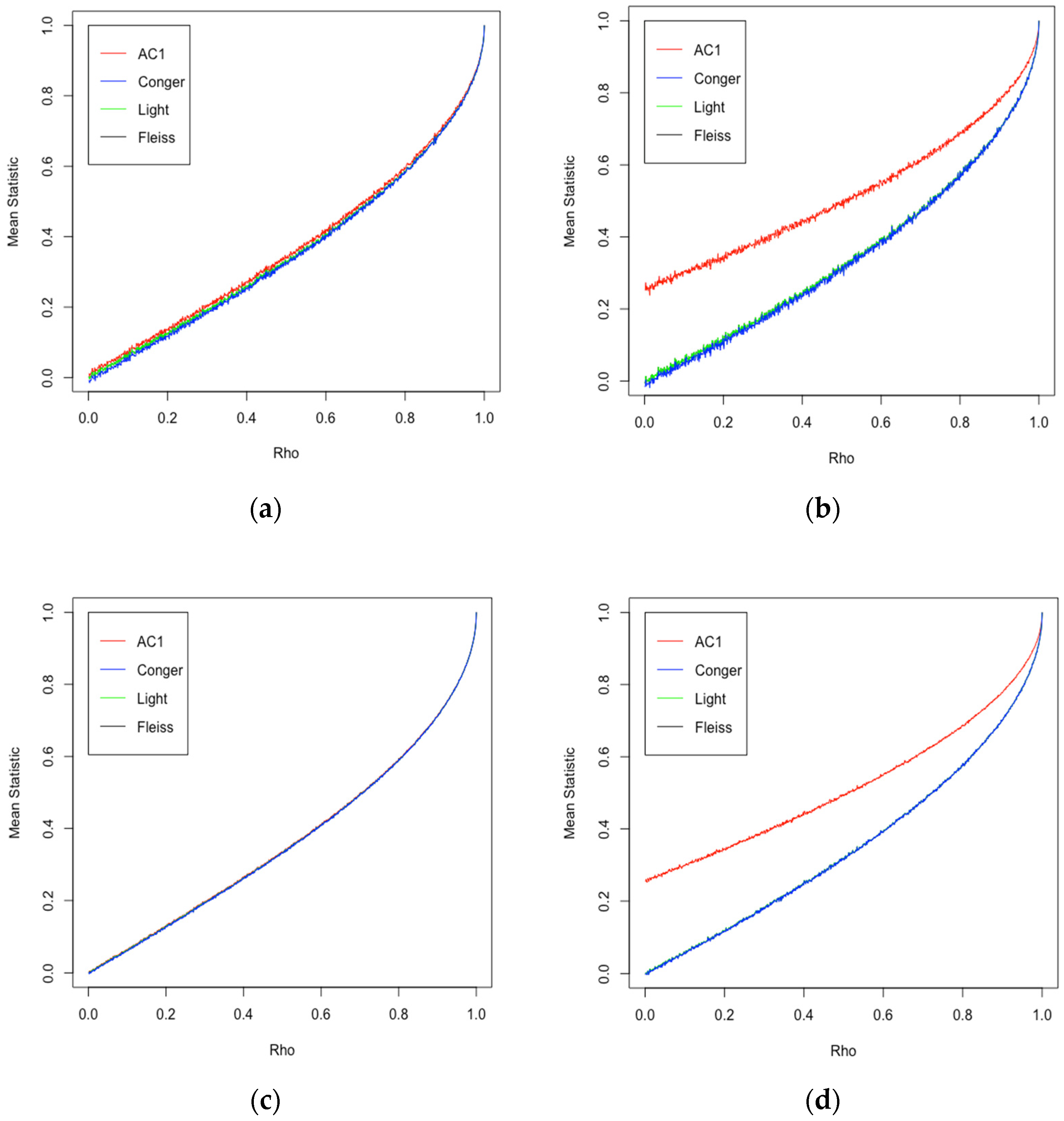

An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters - Symmetry

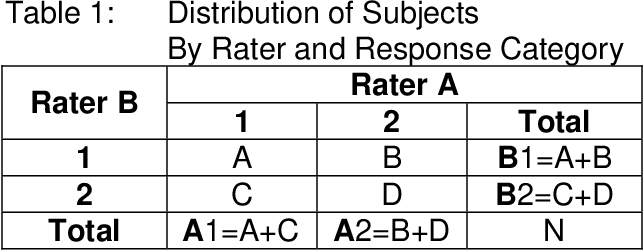

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

Kappa Statistic is not Satisfactory for Assessing the Extent of Agreement Between Raters | Semantic Scholar

